RSU Radio Real College Radio

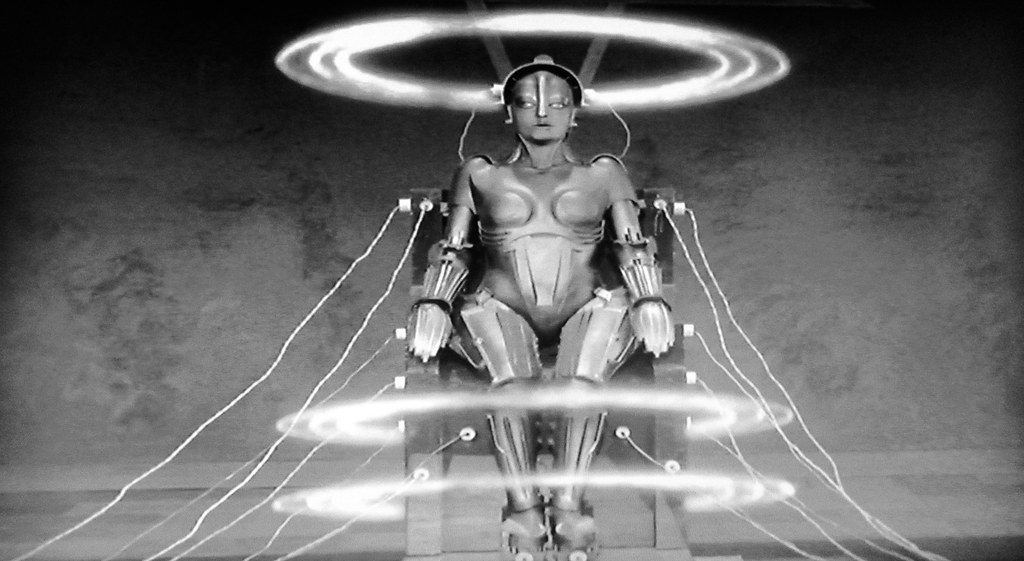

There’s an iconic scene in Fritz Lang’s 1927 film “Metropolis” in which the likeness of the character Maria is transferred onto a robot, with her persona, conduct, and communication patterns replicated in a new, mechanical form. The public takes the robot for Maria herself, as if her identity had simply carried over into the machine.

This is artificial intelligence.

Recently, various covers of iconic (and not so iconic) songs have been going viral online. Who knew Michael Jackson sang Bohemian Rhapsody? Or that, one fine morning, Ye decided to record Frank Sinatra’s Fly Me To The Moon and The Killers’ Mr. Brightside? Well, they didn’t. Sadly.

Online creatives have discovered a method of using artificial intelligence to extract vocals from music recordings. Users then feed the isolated vocals into various software, train a model on those voice samples, and what is generated proves to be scarily accurate…sometimes.

It’s usually bad and very funny.

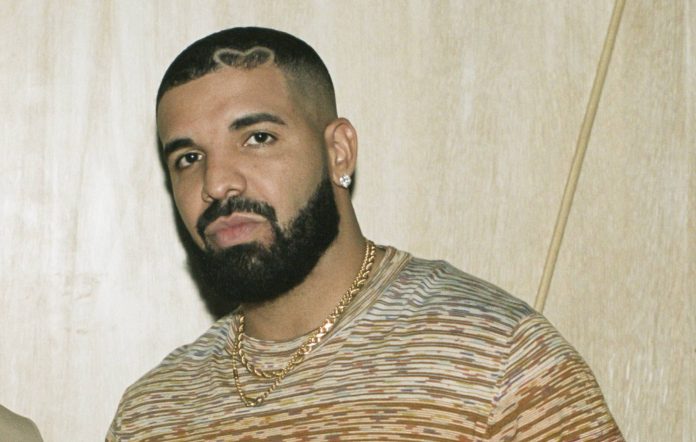

However, it is very quickly becoming much more serious. Heart on My Sleeve, a viral song written by a user called Ghostwriter, combines the voices of Drake and The Weeknd, creating what, on paper, would be a certified hit. Some users, instead of simply applying the AI to their own natural vocals, perform an impression of the artist, mimicking accents or phrases, and create covers, songs, and albums that are nearly indistinguishable from the real thing.

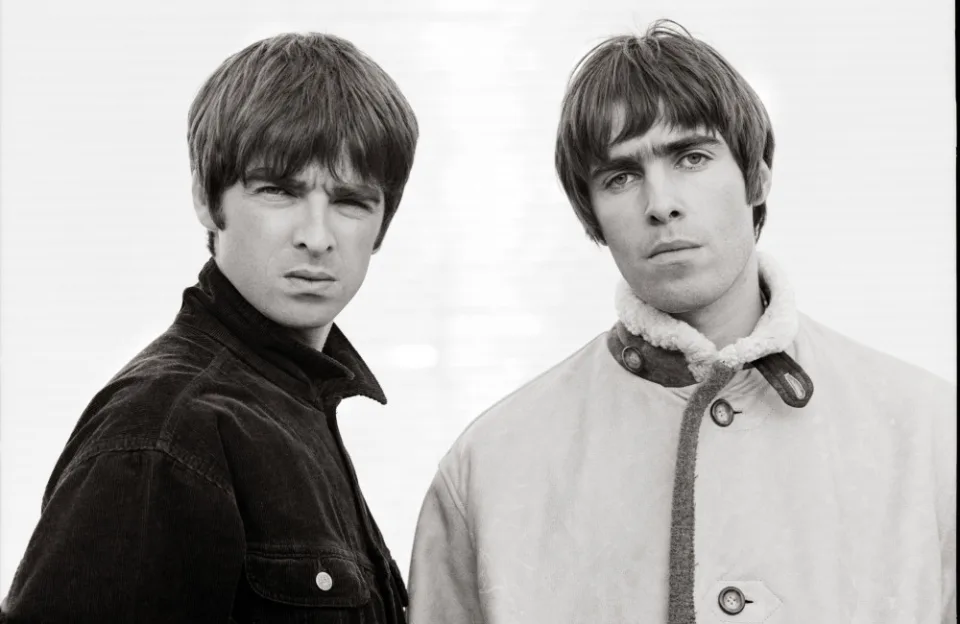

Entire bands have been created out of this phenomenon, including AISIS, a band that imagines Oasis never broke up after the ’90s and has recently returned to the music scene. Liam and Noel remain frequent targets of AI, as users keep generating content where Liam performs Noel’s solo tracks and vice versa (to shockingly good results).

Through my own research of YouTube’s AI covers, I have come to understand that developing “models” (voice filters) requires clean source material. Consequently, artists like Oasis, who have not released official a cappella tracks, are likely to produce less realistic results than artists like Ye, who created the Stem Player, and Michael Jackson, who released a cappellas as B-sides to singles.

Songwriter Holly Herndon, who also studies AI, told the New York Times, “The question is, as a society, do we care what [the original musician] really feels or is it enough to just hear a superficially intelligent rendering? For some people that will not be enough. However, when you consider that most people listening to Spotify are doing so just to have something pleasant to listen to, it complicates things.”

Martin Clancy, who chairs a global committee exploring the ethics of AI in art, says, “What’s at stake are things we take for granted: listening to music made by humans, people doing that as a livelihood and it being recognized as a special skill.”

In contrast, some artists are merely amused. When shown AISIS, Liam Gallagher responded on Twitter that he “sounds mega,” without questioning the ethics or artistry of his voice being duplicated. Drake, after being sent a cover of “himself” performing an Ice Spice song, reposted the video to Instagram with the cheeky caption, “This is the final straw AI.”

There are currently no laws on AI in music, voice duplication, or art. That’s all being discussed right now. I believe it’s best we treat this lawless period as a study of the power (and the limits) of artificial intelligence, though perhaps this is the next step toward our very own “Metropolis”.

Tread carefully, but Kurt Cobain singing Weezer will always be funny.

Written by: Jace
